Data replication takes data from your source databases — Oracle, MySQL, Microsoft SQL Server, PostgreSQL, MongoDB, etc. — and copies it into your destination data warehouse. After you have identified the data you want to bring in, you need to determine the best way to replicate the data so it meets your business needs.

Choosing the right method

The method you choose impacts the end state of your data. Fortunately, there are data replication methods built to integrate with today’s data warehouses and suit a variety of use cases. At Etlworks, we believe in providing users with as much flexibility as possible. Let’s discuss each of the five methods of data replication and outline the option that may be best for you.

High Watermark (HWM)

The term watermark comes from the aftermath of a flood: you look at the water stains on a wall to figure out how high the water got. That is essentially what we want to do here: find the last item that was updated and continue from there. A high watermark is therefore a tool that simplifies querying for updated records, which is a very common use case when synchronizing data.
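As a minimal sketch of the idea, the example below copies only the rows whose watermark column is greater than the value saved by the previous run. It uses Python's built-in `sqlite3` module so it is self-contained; the `customers` table, the `updated_at` column, and the `replicate_incremental` function are hypothetical names chosen for illustration and are not part of Etlworks.

```python
import sqlite3


def replicate_incremental(source, target, last_hwm):
    """Copy rows changed after last_hwm and return the new high watermark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_hwm,),
    ).fetchall()
    # INSERT OR REPLACE acts as an upsert because id is the primary key.
    target.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    target.commit()
    # The highest value seen becomes the starting point for the next run.
    return rows[-1][2] if rows else last_hwm


# Hypothetical source and target, both in memory for the sake of the example.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
ddl = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"
source.execute(ddl)
target.execute(ddl)

source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ann", 100), (2, "Bob", 110)],
)
hwm = replicate_incremental(source, target, 0)  # first run copies both rows

source.execute("UPDATE customers SET name = 'Bobby', updated_at = 120 WHERE id = 2")
hwm = replicate_incremental(source, target, hwm)  # second run copies only id 2
```

Note that a row deleted in the source never shows up in this query, which is why the method cannot replicate deletes.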

Pros:

- fast

- works for all data sources, including all databases, files, and APIs

Cons:

- does not support deletes

- requires a dedicated high watermark field in each table

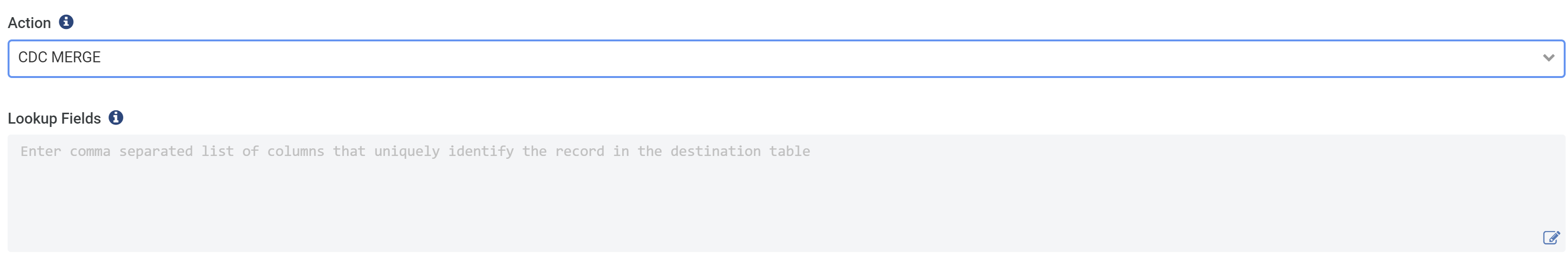

Change Data Capture (CDC)

Change data capture (CDC) is an approach to data integration based on identifying, capturing, and delivering the changes made to the source database, which are recorded in the database's redo log (also called the transaction log). CDC, or log-based replication, is typically the fastest and most reliable way to replicate. It involves reading the database's internal change log every few seconds, copying the changes into the data warehouse, and applying them frequently. CDC fully supports deletes and is the best method for databases that are updated continually.

Pros:

- fast

- no polling from database tables – uses database redo log instead

- supports deletes

- enables near real-time replication

Cons:

- currently supports only Postgres, MySQL, SQL Server, and Oracle

- some older versions of the databases above do not support CDC

- requires extra setup in the source database

Database Triggers

Trigger-based change replication can be implemented in many ways, but the basic idea is that each table that participates in change replication as a source has triggers for INSERT, UPDATE, and optionally DELETE. The triggers write to a shadow table (or tables). A shadow table may store the entire row to keep track of every column change, or only the primary key together with the operation type (insert, update, or delete).
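The sketch below illustrates the "primary key plus operation type" variant, using SQLite through Python's built-in `sqlite3` module. The `orders` table, the `orders_changes` shadow table, and the trigger names are assumptions made for this example; trigger syntax differs between database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT);

-- Shadow table: stores only the primary key and the operation type.
CREATE TABLE orders_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    order_id  INTEGER NOT NULL,
    operation TEXT NOT NULL
);

CREATE TRIGGER orders_ai AFTER INSERT ON orders
BEGIN
    INSERT INTO orders_changes (order_id, operation) VALUES (NEW.id, 'insert');
END;

CREATE TRIGGER orders_au AFTER UPDATE ON orders
BEGIN
    INSERT INTO orders_changes (order_id, operation) VALUES (NEW.id, 'update');
END;

CREATE TRIGGER orders_ad AFTER DELETE ON orders
BEGIN
    INSERT INTO orders_changes (order_id, operation) VALUES (OLD.id, 'delete');
END;
""")

# Ordinary writes to the source table; the triggers record each one.
conn.execute("INSERT INTO orders VALUES (1, 'new')")
conn.execute("UPDATE orders SET status = 'shipped' WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 1")

# A replication job would read the shadow table in change_id order.
changes = conn.execute(
    "SELECT order_id, operation FROM orders_changes ORDER BY change_id"
).fetchall()
```

Every write to `orders` now performs a second write to `orders_changes`, which is the source of the performance cost listed under the cons below.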

Pros:

- works for any source database which has triggers

- no extra requirements for the specific version of the database or extra field in each table

Cons:

- requires adding triggers to all database tables

- triggers can negatively impact performance

Real-time CDC with Kafka

Apache Kafka is a popular technology to share data between systems and build applications that respond to data events. Etlworks completes Apache Kafka solutions by delivering high-performance real-time data integration.

Etlworks parses the CDC events emitted to the Kafka topic, automatically transforms the events into DML SQL statements (INSERT/UPDATE/DELETE), and executes those statements in the target database in the order they were created. It also handles collisions and errors, ensuring that the solution is 100% reliable.
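To make the event-to-SQL step concrete, here is a simplified sketch, not Etlworks' actual implementation. It assumes events shaped loosely like the Debezium change event envelope (an `op` code of `c`, `r`, `u`, or `d` plus `before` and `after` row images), with the Kafka consumer itself left out. The `event_to_sql` function, the `users` table, and the sample events are hypothetical.

```python
import sqlite3


def event_to_sql(table, key, event):
    """Translate one simplified CDC event into a parameterized DML statement."""
    op = event["op"]
    if op in ("c", "r"):  # create, or a row read during the initial snapshot
        row = event["after"]
        cols = ", ".join(row)
        marks = ", ".join("?" for _ in row)
        return f"INSERT INTO {table} ({cols}) VALUES ({marks})", list(row.values())
    if op == "u":  # update: set every non-key column, match on the key
        row = event["after"]
        sets = ", ".join(f"{c} = ?" for c in row if c != key)
        params = [v for c, v in row.items() if c != key] + [row[key]]
        return f"UPDATE {table} SET {sets} WHERE {key} = ?", params
    if op == "d":  # delete: only the old row image is available
        return f"DELETE FROM {table} WHERE {key} = ?", [event["before"][key]]
    raise ValueError(f"unsupported operation: {op!r}")


# Hypothetical events, listed in the order they were produced.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "b@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "c@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "c@example.com"}, "after": None},
]

target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")

# Apply the statements strictly in event order so the target converges.
for event in events:
    sql, params = event_to_sql("users", "id", event)
    target.execute(sql, params)
target.commit()

final_rows = target.execute("SELECT id, email FROM users ORDER BY id").fetchall()
```

Applying the events in order matters: replaying the delete before the matching insert would leave a row in the target that no longer exists in the source.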

Pros:

- fast

- no polling from database tables

- supports deletes

- supports real-time replication

Cons:

- complex setup (requires Kafka, ZooKeeper, Kafka Connect, and Debezium)

- supports only Postgres, MySQL, SQL Server, Oracle, and MongoDB

- some older versions of the databases above do not support CDC

- requires extra setup in the source database

Full refresh

Sometimes the simplest approach is the best. The full refresh replication method is best for small tables, static data, or one-time imports. Because it reloads the entire dataset on every run, it is typically slower than the other methods.
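A full refresh can be sketched as "delete everything in the target, then reload from the source". The example below is a minimal illustration using Python's built-in `sqlite3` module; the `full_refresh` function and the `countries` table are hypothetical names, and a real pipeline would typically use the warehouse's bulk-load facilities instead of row-by-row inserts.

```python
import sqlite3


def full_refresh(source, target, table, columns):
    """Replace the target table's contents with a fresh copy of the source."""
    col_list = ", ".join(columns)
    marks = ", ".join("?" for _ in columns)
    rows = source.execute(f"SELECT {col_list} FROM {table}").fetchall()
    # One transaction: the delete and the reload commit together or not at all.
    with target:
        target.execute(f"DELETE FROM {table}")
        target.executemany(
            f"INSERT INTO {table} ({col_list}) VALUES ({marks})", rows
        )
    return len(rows)


# Hypothetical source and target, both in memory for the sake of the example.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
ddl = "CREATE TABLE countries (code TEXT PRIMARY KEY, name TEXT)"
source.execute(ddl)
target.execute(ddl)

source.executemany(
    "INSERT INTO countries VALUES (?, ?)",
    [("CA", "Canada"), ("US", "United States")],
)
# A stale row that no longer exists in the source.
target.execute("INSERT INTO countries VALUES ('XX', 'Obsolete')")
target.commit()

copied = full_refresh(source, target, "countries", ["code", "name"])
```

Because the whole table is rewritten, rows deleted in the source disappear from the target as well, at the cost of moving every row on every run.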

Pros:

- the simplest to set up

- can be quite fast for relatively small datasets (<100K records)

- works for all data sources

Cons:

- not recommended for large datasets

Want to learn more about our replication options and what’s best for your data? Talk to us!