An API integration is a connection between two or more applications, made through their APIs, that lets those systems exchange data. At many high-performing businesses, API integrations power the processes that keep data in sync, enhance productivity, and drive revenue.

What is an API?

An API is a set of definitions and protocols for building and integrating application software. API stands for application programming interface.

An API is like a building block of programming that helps programmers avoid writing code from scratch each time it is needed. APIs are organized sets of commands, functions, and protocols that programmers use to develop software.

Consider building something with a box of Lego bricks. Instead of creating or carving a new block each time you need one, you simply choose from an assortment that are prepared and ready to be plugged into your project. Each block is designed to connect with other blocks in order to speed up the building process. In essence, that’s how an API works. APIs streamline and boost efficiency everywhere they are used.

The most commonly used API for web services: REST

REST stands for Representational State Transfer. Where a regular API is essentially a set of functions that allow communication between applications, a REST API follows an architectural style for connected applications on the web.

In fact, today 70% of public APIs depend on REST services. REST APIs offer more flexibility and a gentler learning curve, and they work directly from an HTTP URL rather than relying on XML message envelopes, as SOAP does.

How do REST APIs work?

In their simplest form, REST APIs for web services usually involve the following elements:

- Your web-based, API-enabled application

- Remote server

- Specific data request

- Returned data/function

While there are many different flavors of software and many different flavors of server, REST APIs act as a standardized wrapper to help your API-enabled applications successfully communicate with online servers to make information requests.
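The four elements above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a production design: the `/customers/<id>` path, the `CUSTOMERS` data, and the names `CustomerHandler`, `fetch_customer`, and `demo` are all assumptions made up for this example, and the "remote server" is simply a local server started on a free port.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data held by the "remote server".
CUSTOMERS = {"42": {"id": 42, "name": "Ada"}}


class CustomerHandler(BaseHTTPRequestHandler):
    """Remote server side: answers GET /customers/<id> with JSON."""

    def do_GET(self):
        # The URL path identifies the resource being requested.
        parts = self.path.strip("/").split("/")
        record = None
        if len(parts) == 2 and parts[0] == "customers":
            record = CUSTOMERS.get(parts[1])
        status = 200 if record is not None else 404
        payload = record if record is not None else {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the example


def fetch_customer(host, port, customer_id):
    """Application side: send a specific data request, return the data."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/customers/{customer_id}")
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()


def demo():
    # Port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), CustomerHandler)
    worker = threading.Thread(target=server.serve_forever, daemon=True)
    worker.start()
    try:
        return fetch_customer("127.0.0.1", server.server_port, 42)
    finally:
        server.shutdown()
        server.server_close()
```

Under these assumptions, `demo()` returns `(200, {"id": 42, "name": "Ada"})`. The calling application never needs to know how the server stores its data; it only needs the URL and the agreed response format.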

Now, how do API integrations work?

API integration is the process of creating a means for two or more applications to share data and communicate with each other, through their APIs, without human intervention. It allows organizations to automate their systems, share data seamlessly, and integrate their existing applications.

API integration has become pivotal in the modern world due to the explosion of cloud-based products and apps. Organizations need a connected system in which data is relayed reliably between various software tools without anyone having to move it manually. API integration has proved to be the much-needed solution, as it allows process and enterprise data to be shared among the applications in a given ecosystem. It improves the flexibility of information and service delivery, and it makes embedding content from different sites and apps easy. An API acts as the interface that permits the integration of two applications.

Building API integration into your application or workflow allows you to make the most of your data by employing it where it will be most useful. Whether this means using it for analytics or as content within your software or applications, integration keeps you connected to your business information.
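As a rough sketch of what such an integration does, the Python below reads records from one application's API and writes them to another's, mapping fields along the way. Everything here is hypothetical: `FakeCrmApi` and `FakeMailingApi` are in-memory stand-ins for real API clients, and the field names are invented for illustration. A real integration would replace them with HTTP calls to the actual services.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCrmApi:
    """Stand-in for a source application's API (hypothetical)."""
    contacts: list = field(default_factory=list)

    def list_contacts(self):
        return list(self.contacts)


@dataclass
class FakeMailingApi:
    """Stand-in for a destination application's API (hypothetical)."""
    subscribers: dict = field(default_factory=dict)

    def upsert_subscriber(self, email, full_name):
        # Keyed by email, so repeating a sync does not create duplicates.
        self.subscribers[email] = {"email": email, "full_name": full_name}


def sync_contacts(source, destination):
    """Copy contacts from source to destination; return how many were synced."""
    synced = 0
    for contact in source.list_contacts():
        email = contact.get("email")
        if not email:
            continue  # skip records the destination cannot key on
        # Map the source's field layout onto the destination's.
        first = contact.get("first_name", "")
        last = contact.get("last_name", "")
        full_name = f"{first} {last}".strip()
        destination.upsert_subscriber(email.lower(), full_name)
        synced += 1
    return synced
```

A scheduler or an event trigger could call `sync_contacts` on its own, which is what removes the manual step. Writing the destination call as an upsert is one design choice that keeps repeated runs safe.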

Getting started with APIs

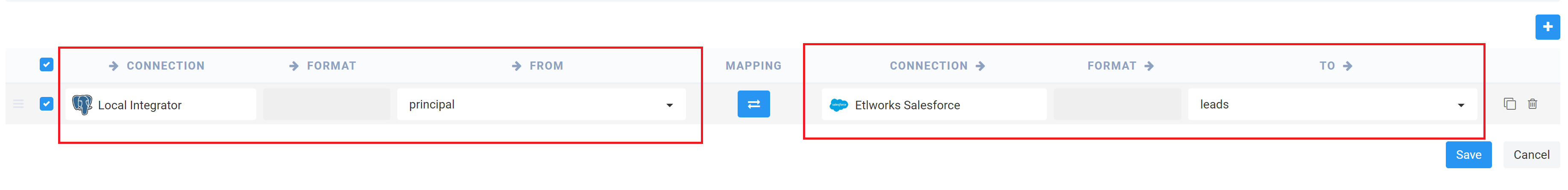

Data integration using API technology doesn’t have to be daunting. Etlworks Integrator uses API-led connectivity to create an application network of apps, data, and devices, both on-premises and in the cloud. It provides developers with a complete toolkit to enhance scalability, simplify mapping and security, and manage, prepare, and profile all of your company’s data. Whether you want to build event-driven data integration flows or incorporate Integrator into your own automation workflow, you can use the developer features below to achieve your goals.

See how Etlworks can help you make the most of APIs with a free demo of the Data Integration Platform.